Intro

GoldenGate replication solution allows you to capture, replicate, filter, transform, and deliver real time transactional data across Oracle or heterogeneous environments.

Goldengate 12c features along with Oracle multitenant architecture introduced some changes to the way replication works (see Fig-1). Another interesting fact is that few of Oracle Streams features have now found their way into GoldenGate.

This article describes how to configure Oracle GoldenGate 12c in order to perform a Bi-directional replication between Oracle source and target Container Database 12c. We will be using Integrated Replicat and Integrated Extract since

Integrated mode extraction is the only supported method for Multitenant databases.

Multitenant specificity

Here are some major changes regarding GoldenGate coupled with Oracle Database 12c multitenant architecture:

- One Extract process can be configured to capture changes from multiple Pluggable Databases (same redo stream is shared by all PDBs ).

- Replicat process can only connect and apply to one Pluggable Database (PDB).

- Extract must operate in integrated capture mode (only supported mode for multitenant DBs), a log mining server is involved and this is only accessible from the root container (CDB$ROOT).

- We have to connect as a common user to attach to the logminer on the server.

Fig1- Capture and apply process from multiple PDBs (integrated mode).

What will be covered ?

In this post we will focus on the following main steps:

I. GoldenGate Environment Configuration

II. Initial load

III. Apply change data

IV. Bi-directional replication

I. GoldenGate Environment configuration Go to Top⭡

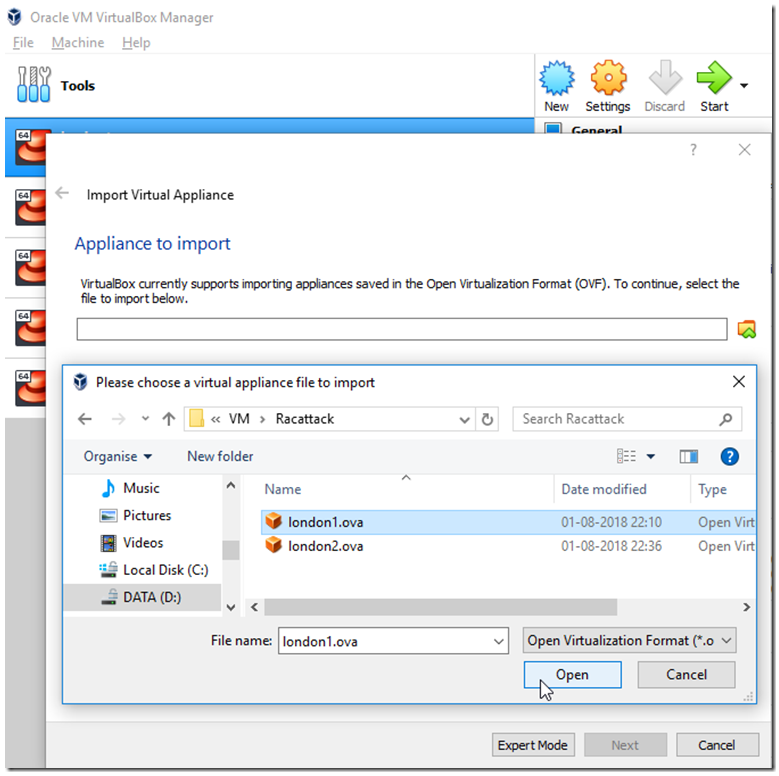

My lab is based on the below source and target systems. Both contain similar Pluggable database called ”PDB” Fig2- Summary of the source and target systems

A- Installation

GoldenGate installation is pretty straightforward. Make sure you specify the right GGate and database homes

Note : If there is only one GoldenGate installation for multiple DBs you will have to set those variables in the extract

and replicat processes directly as below :

SETENV (ORACLE_HOME = "path to Oracle home location")

SETENV (ORACLE_SID = "SID")

B-Preparation Go to Top⭡

An extract process for a multitenant database must be created at the root container level with a "common" database

user and must be defined to run in the "integrated" capture mode. Replicats, on the other hand, must be created at

the pluggable database level and can be defined to run in either the "classic" or "integrated" modes.

1. On the source system (MONTREAL)

» Create source OGG admin User

- Assign the correct source privileges to the GoldenGate admin user as shown below@MTL> CREATE user c##gg_admin identified by gg_adminin ;

@MTL> ALTER USER gg_admin quota unlimited ON users;

@MTL> GRANT CREATE SESSION,CONNECT,RESOURCE,ALTER SYSTEM, select any dictionary,FLASHBACK ANY TABLE to c##gg_admin container=all;@MTL> EXEC DBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE(grantee=>'c##gg_admin', container=>'all',privilege_type=>'CAPTURE',grant_select_privileges => TRUE,

do_grants => TRUE,grant_optional_privileges=>'*'); -- minimum privs-- If you want both Capture(extract) and apply(replicat) privileges

@MTL>

EXEC DBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE('C##GG_ADMIN',container=>'ALL');

SQL> grant dba to c##ggadmin container=all; –- optional

» Add supplemental log data for update operations

@MTL> ALTER USER c##gg_admin set container_data=all container=current;

@MTL> GRANT ALTER any table to c##gg_admin container=ALL; --"ADD TRANDATA"

@MTL> ALTER SYSTEM SET ENABLE_GOLDENGATE_REPLICATION=TRUE SCOPE=BOTH;

@MTL> ALTER DATABASE FORCE LOGGING;

@MTL> ALTER SESSION SET CONTAINER=PDB;

@MTL> ALTER PLUGGABLE DATABASE ADD SUPPLEMENTAL LOG DATA; -- minimum logging

2. On the TARGET system (TORONTO)

» Create target OGG admin User

@TOR> CREATE user c##gg_admin identified by gg_adminin ;

@TOR> ALTER USER gg_admin quota unlimited ON users;

@TOR> GRANT unlimited TABLESPACE TO c##gg_admin container=all; –sometimes needed

@TOR> ALTER SYSTEM SET ENABLE_GOLDENGATE_REPLICATION=TRUE SCOPE=BOTH;

- Assign the right target privileges to the GoldenGate admin user as shown below

@TOR> GRANT create session,CONNECT,RESOURCE,ALTER SYSTEM,select any dictionary to c##gg_admin container=all;@TOR> EXEC DBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE(grantee=>'c##gg_admin', container=>'all',privilege_type=>'APPLY',grant_select_privileges => TRUE,do_grants => TRUE,grant_optional_privileges=>'*',

container='PDB'); -- minimum privs

@TOR> grant dba to c##ggadmin container=all;-- If you want both Capture(extract) and Apply(replicat) privileges on all PDBs (Bidirectional)

@TOR>

EXEC DBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE('c##gg_admin',container=>'ALL');

II. Initial Load Go to Top⭡

This is basically the first stage where the extract captures data directly from source tables and without using trail files.

Important: First make sure that both source and target PDB open state is permanent before setting up any replication

@SQL> ALTER SESSION SET CONTAINER=PDB; --- on Both src/target

@SQL> ALTER PLUGGABLE DATABASE OPEN;

@SQL> ALTER PLUGGABLE DATABASE SAVE STATE;

A- Prepare the target Tables

» (Re)Create an empty sample schema “SCOTT” on the target PDB (download script here)

@TOR> ALTER SESSION SET CONTAINER=PDB;

@TOR> @scott_empty.sql

Note : You can run this script @scott.sql in the source PDB if SCOTT schema is not yet created

B- Configuration

1. On the source system (MONTREAL)

» Configure OGG User alias and schema logging

oracle@montreal:$GG_HOME# ggsci

GGSCI > DBLOGIN USER c##gg_admin@MTL password ggadmin

GGSCI(CDB$ROOT)> ALTER credentialstore add user c##gg_admin@MTL alias gg_adminGGSCI(montreal/CDB$Root)> add schematrandata pdb.scot --log extra columns

Note : This lab assumes that tns aliasses have been created for both source and target database and PDBs

@MTL= montreal , @TOR=toronto , @PDB= PDB in each server respectively

» Allow incoming access to the Manager process

GGSCI(montreal/CDB$ROOT)> EDIT PARAMS mgr

PORT 7809

accessrule, prog *, ipaddr 192.168.78.55, allow

PURGEOLDEXTRACTS ./dirdat/*, USECHECKPOINTS, MINKEEPFILES 2, FREQUENCYHOURS 6

GGSCI(montreal/CDB$ROOT)> START mgr

» Create an initial Extract parameter file

GGSCI(montreal/CDB$ROOT)> EDIT PARAMS Capture1

extract capture1

useridalias gg_admin

rmthost toronto.evilcorp.com, mgrport 7809

rmttask replicat, group apply1

SOURCEISTABLE

SOURCECATALOG PDB -- Specify source PDB

TABLE SCOTT.* ; -- All tables from Scott schema» Add and register the initial extract with the container database

GGSCI(montreal/CDB$ROOT)> REGISTER EXTRACT Capture1 DATABASE CONTAINER (PDB)

GGSCI(montreal/CDB$ROOT)> ADD EXTRACT Capture1, SOURCEISTABLE

2. On the TARGET system (TORONTO)

» Add OGG User alias PDB level (can be run from root CDB too)

oracle@toronto:$GG_HOME# ggsci

GGSCI> DBLOGIN USER c##gg_admin@PDB password ggadminPDB

GGSCI(toronto)>ALTER credentialstore add user c##gg_admin@PDB alias gg_adminPDB

» Allow incoming access to the Manager process

GGSCI(toronto/CDB$ROOT)> EDIT PARAMS mgr

PORT 7809

accessrule, prog *, ipaddr 192.168.78.54, allow

PURGEOLDEXTRACTS ./dirdat/*, USECHECKPOINTS, MINKEEPFILES 2, FREQUENCYHOURS 6

GGSCI(toronto/CDB$ROOT)> START mgr

» Create a Replicat parameter file

GGSCI (toronto/PDB)> EDIT PARAMS apply1

replicat apply1

useridalias gg_adminPDB

HANDLECOLLISIONS

SOURCECATALOG PDB

map PDB.SCOTT.*, target PDB.SCOTT.*;

» Add the integrated replicat

<GGSCI(toronto/PDB)> ADD REPLICAT apply1, SPECIALRUN

» Start the extract process on the source system

<GGSCI(montreal/CDB$ROOT)> START EXTRACT capture1

This will allow target scott schema to be synchronized in few seconds .

Note: In case datatypes weren’t similar, a definition file is required to handle the mapping using DEFGEN utility

III. Apply Change Data Go to Top⭡

Extract process will pull data from the PDB and send it across the network to the target system. Once data is

written to target trail files, the integrated replicat will convert it to LCRs which will be applied to target PDB by the

inbound server.

Fig4- Integrated Replicat

Fig4- Integrated Replicat

A- Configuration

1. On the source system (MONTREAL)

» Create Integrated Primary extract

<GGSCI(montreal/CDB$ROOT)> edit params cap_scot

EXTRACT Cap_scot

UserIdAlias gg_admin

LOGALLSUPCOLS -- Capture before image of supplementally logged columns

UPDATERECORDFORMAT COMPACT -- Combines before/after images of UPDATES in trail

DISCARDFILE ./dirrpt/cap_scot.dsc,purge

EXTTRAIL ./dirdat/es

SOURCECATALOG PDB

TRANLOGOPTIONS EXCLUDETAG 00 -- To avoid extracting incoming replicated data

DDL INCLUDE MAPPED OBJNAME SCOTT.*

TABLE PDB.SCOTT.*;

Note : TRANLOGOPTIONS is only required for bidirectional replication . I added it in purpose here.

» Add and register the integrated extract with the container database

<GGSCI(montreal)> REGISTER EXTRACT cap_scot DATABASE CONTAINER(PDB)

<GGSCI(montreal)> ADD EXTRACT cap_scot,INTEGRATED TRANLOG, begin now

» Create a trail for the Extract group and start it

<GGSCI(montreal)> ADD EXTTRAIL ./dirdat/es , EXTRACT cap_scot

<GGSCI(montreal)> START EXTRACT cap_scot

» Create a SECONDARY EXTRACT DATA PUMP (recommended).

This is an optional component for our primary extract helping to move the trail over the network.

<GGSCI(montreal)> edit params e_pump

EXTRACT e_pump

PASSTHRU

PASSTHRUMESSAGES

RMTHOST toronto.evilcorp.com ,MGRPORT 7809

RMTTRAIL ./dirdat/rp

TABLE PDB.SCOTT.* ;

» Create and start a data pump extract

<GGSCI(montreal)> ADD EXTRACT e_pump, EXTTRAILSOURCE ./dirdat/es, BEGIN NOW <GGSCI(montreal)> ADD RMTTRAIL ./dirdat/rp, extract e_pump

<GGSCI(montreal)> START EXTRACT e_pump

2. On the Target system (TORONTO)

» Create a Replicat parameter file

<GGSCI(TORONTO)> edit params rep_scot

replicat rep_scot

useridalias gg_adminPDB discardfile ./dirrpt/rep_scot.dsc, append

discardrollover on sunday

SOURCECATALOG PDB

HANDLECOLLISIONS SCOTT.*

map PDB.SCOTT.*, target PDB.SCOT.*;

Note: No need to create a checkpoint table for the integrated replicat unless Data Guard is configured

» Add and start the integrated replicat

<GGSCI(TORONTO)> ADD REPLICAT rep_scot,integrated exttrail ./dirdat/rp

<GGSCI(TORONTO)> START rep_scot

- Remote trail prefix should be the same as specified in the data pump parameter file (rp)

» We can now verify that the data is replicated to the target by performing a little update on the source database

Scott@PDB:Montreal> Update salgrade set HISAL=1000000 where grade=5;

Scott@PDB:montreal> Commit;

-- Check the rows in target Database

Scott@PDB:Toronto> Select * from salgrade;

GRADE LOSAL HISAL

---------- ---------- ----------

1 700 1200

2 1201 1400

3 1401 2000

4 2001 3000

5 3001 1000000 ---> update replicated (initial value 9999)

IV. BI-Directional Replication (active-active) Go to Top⭡

When implementing a bidirectional configuration, you must consider the following areas to avoid data integrity issues:

• Loop detection ( ping pong data behavior)

• Conflict detection and resolution (CDR) when same column is modified at a same time on both systems

• Oracle Triggers (Replicat data triggering DML operations on target system)

Golengate12c already handles the above issues as

- EXCLUDETAG parameter ignores replicat redo stream during the extraction (No Loops)

- LOGALLSUPCOLS parameter supports CDR

- Integrated Replicat doesn’t require disabling dml triggers on the target system.

A- Configuration

As Extract and Replicat processes will be on both servers, I will refer to Montreal as target and Toronto as source.1. On the source system (TORONTO)

» Add supplemental log data for update operations

@TOR> ALTER user c##gg_admin set container_data=all container=current;

@TOR> GRANT ALTER any table to c##gg_admin container=ALL; --"ADD TRANDATA"

@TOR> ALTER DATABASE FORCE LOGGING;

@TOR> ALTER SESSION SET CONTAINER=PDB;

@TOR> ALTER PLUGGABLE DATABASE ADD SUPPLEMENTAL LOG DATA; -- minimum logging

» Configure OGG User alias and schema logging (root level)

GGSCI > DBLOGIN USER c##gg_admin@TOR password ggadmin

GGSCI(CDB$ROOT)> ALTER credentialstore add user c##gg_admin@TOR alias gg_admin

GGSCI(Toronto/CDB$ROOT)> add schematrandata pdb.scott --log extra columns

» Verify that the right source privileges are granted to GoldenGate admin user

» Create Integrated Primary extract-- Run this command if it hasn’t been run yet in the new source(Toronto)

@TOR> EXEC DBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE('C##GG_ADMIN',container=>'ALL');

<GGSCI toronto > edit params to_scot

EXTRACT to_scot

UserIdAlias gg_admin

LOGALLSUPCOLS -- Capture before image of supplemental log columns

UPDATERECORDFORMAT COMPACT -- Combines before/after images of UPDATES in trail

DISCARDFILE ./dirrpt/to_scot.dsc,purge

EXTTRAIL ./dirdat/et

SOURCECATALOG PDB

TRANLOGOPTIONS EXCLUDETAG 00 -- Avoid extracting incoming replicated data

DDL INCLUDE MAPPED OBJNAME SCOTT.*

DDLOPTIONS REPORT

TABLE PDB.SCOTT.*;

» Add and register the integrated extract with the container database

<GGSCI(toronto)> REGISTER EXTRACT to_scot DATABASE CONTAINER(PDB)

<GGSCI(toronto)> ADD EXTRACT to_scot,INTEGRATED TRANLOG, begin now

» Create a trail for the Extract group and start it

<GGSCI (toronto)> ADD EXTTRAIL ./dirdat/et , EXTRACT to_scot

<GGSCI (toronto)> start extract to_scot

» Create a SECONDARY EXTRACT DATA PUMP

Optional but why not :).

<GGSCI(toronto)> Edit params to_epump

EXTRACT to_epump

PASSTHRU

PASSTHRUMESSAGES

RMTHOST montreal.evilcorp.com ,MGRPORT 7809

RMTTRAIL ./dirdat/rm

TABLE PDB.SCOTT.* ;

» Create and start a data pump extract

<GGSCI(toronto)> ADD EXTRACT to_epump,EXTTRAILSOURCE ./dirdat/et, BEGIN NOW

<GGSCI(toronto)> ADD RMTTRAIL ./dirdat/rm, extract to_epump

<GGSCI(toronto)> start extract to_epump

2. On the Target system (MONTREAL)

» Verify that the right source privileges are granted to GoldenGate admin user

-- Run the below commands if it hasn’t been run yet in the new target(Montreal)

@TOR> EXEC DBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE('C##GG_ADMIN',container=>'ALL');

SQL> grant dba to c##ggadmin container=all;» Create an OGG admin User (PDB level)

<GGSCI(montreal)> ALTER credentialstore add user c##gg_admin@PDB alias gg_adminPDB

» Create a Replicat parameter file

<GGSCI(gg_admin@montreal)> EDIT params r_mscot

replicat r_mscot

useridalias gg_adminPDB

discardfile ./dirrpt/rep_mscot.dsc, purge, megabytes 10

discardrollover on sunday

HANDLECOLLISIONS

map PDB.SCOTT.*, target PDB.SCOTT.*;

» Add and start the integrated replicat

<GGSCI(gg_admin@montreal)> ADD REPLICAT r_mscot,integrated exttrail ./dirdat/rm

<GGSCI(gg_admin@montreal)> START r_mscot

- Remote trail prefix should be the same as specified in the data pump parameter file (rm)

B- Test & Monitoring Go to Top⭡

» Let’s verify if data is now replicated in both directions via an insert on the new source and a DDL on target PDB

- 1) From TOR to MTL

Scott@PDB:Toronto> Insert into DEPT values(50,'MARKETING','LOS ANGELES');

Scott@PDB:Toronto> Commit;

-- Check the rows in target Database

Scott@PDB:Montreal> Select * from SALGRADE;

DEPTNO DNAME LOC

---------- -------------- -------------

10 ACCOUNTING NEW YORK

20 RESEARCH DALLAS

30 SALES CHICAGO

40 OPERATIONS BOSTON

50 MARKETING LOS ANGELES --> new row replicated

- 2) From MTL to TOR

Scott@PDB:Montreal> alter table BONUS add (Bonus_date date);

-- check

Scott@PDB:Toronto> DESC BONUS

Name Type

----------------- ------------

ENAME VARCHAR2(10)

JOB VARCHAR2(9)

SAL NUMBER

COMM NUMBER

BONUS_DATE DATE

» Last but not least : Here are few GGSCI commands that help monitor the status of the replication processes.

Go to Top⮉-- Info related command

<GGSCI> Info [extract/replicat/Mgr/extrail|rmtrail path ] ,detail

-- Status and reports

GGSCI(montreal/CDB$ROOT)> stats/status [extract/replicat/mgr]

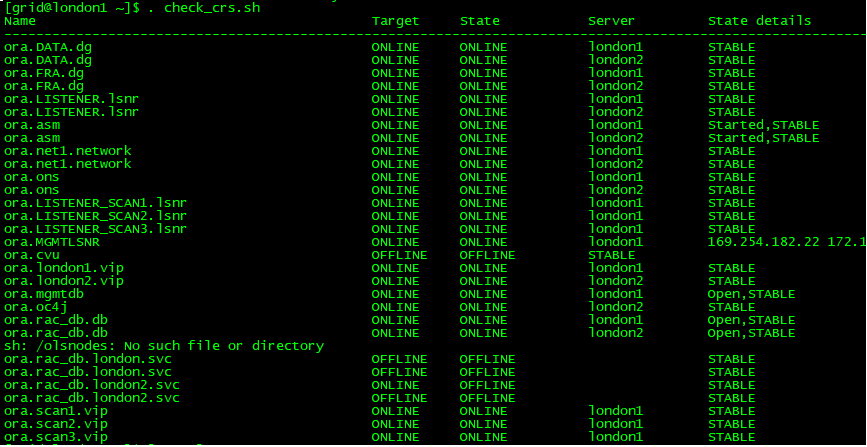

GGSCI(montreal/CDB$ROOT)> view report [Process]<GGSCI(montreal/CDB$ROOT)> info all

Program Status Group Lag at Chkpt Time Since Chkpt

----------- ----------- ----------- ------------- -----------------

MANAGER RUNNING

EXTRACT RUNNING CAP_SCOT 00:00:04 00:00:08

EXTRACT RUNNING E_PUMP 00:00:00 00:00:05

REPLICAT RUNNING R_MSCOT 00:00:00 00:00:02<GGSCI(Toronto/CDB$ROOT)> info all

Program Status Group Lag at Chkpt Time Since Chkpt

----------- ----------- ----------- ------------- -----------------

MANAGER RUNNING

EXTRACT RUNNING TO_EPUMP 00:00:00 00:00:09

EXTRACT RUNNING TO_SCOT 00:00:10 00:00:08

REPLICAT RUNNING REP_SCOT 00:00:00 00:00:05

<GGSCI(montreal/CDB$ROOT)> info CAP_SCOT --> Extract A

EXTRACT CAP_SCOT Last Started 2019-09-07 17:59 Status RUNNING

Checkpoint Lag 00:00:05 (updated 00:00:03 ago)

Process ID 20232

Log Read Checkpoint Oracle Integrated Redo Logs

2019-09-07 18:08:17

SCN 0.2590348 (2590348)<GGSCI(Toronto/CDB$ROOT)> info REP_SCOT --> Replicat A

REPLICAT REP_SCOT Last Started 2019-09-07 17:59 Status RUNNING

INTEGRATED

Checkpoint Lag 00:00:00 (updated 00:00:09 ago)

Process ID 20244

Log Read Checkpoint File /u01/app/oracle/product/12.3/ogg/dirdat/rp000000003

2019-09-07 17:59:29.594319 RBA 1636

<GGSCI(Toronto/CDB$ROOT)> info TO_SCOT --> Extract B

EXTRACT TO_SCOT Last Started 2019-09-07 18:00 Status RUNNING

Checkpoint Lag 00:00:10 (updated 00:00:03 ago)

Process ID 20270

Log Read Checkpoint Oracle Integrated Redo Logs

2019-09-07 18:06:49

SCN 0.2484027 (2484027)<GGSCI(montreal/CDB$ROOT)> info R_MSCOT --> Replicat B

REPLICAT R_MSCOT Last Started 2019-09-07 18:01 Status RUNNING

INTEGRATED

Checkpoint Lag 00:00:00 (updated 00:00:01 ago)

Process ID 20309

Log Read Checkpoint File /u01/app/oracle/product/12.3/ogg/dirdat/rm000000005

2019-09-07 18:00:16.975866 RBA 1632